I have been a teacher for 30 years, a Headteacher for 15 years and, at the age of 54, this much I know about how to use research evidence to improve both my teaching and my students’ outcomes.

In this article, I outline the steps I took: being directed to a research paper, using that paper’s evidence to change my teaching, and then measuring the impact of that change upon my students’ outcomes. I have told aspects of this story before, but never coherently in one place, from beginning to end.

I have been teaching for over 30 years and for the first 25 I really didn’t know what I was doing; the shocking truth is that I got by on force of character and enthusiasm. It has only been in the last five years – since we became a Research School – that I have understood how to teach in a way that helps students learn effectively.

In February 2015 I was prompted to approach Alex Quigley, our erstwhile Director of Research, when I was faced with the following problem: my students’ AS mock examination results were poor – the most popular grade was a big fat U.

The frustration was that I knew they knew their Economics content. My challenge was to answer the question, How can I train my students’ thinking so that they can apply their knowledge of Economics to solve the contextual problems they face in the terminal examinations?

By then we were familiar with the Sutton Trust-Education Endowment Foundation Teaching and Learning Toolkit, which rates developing students’ metacognition and self-regulation as a relatively cheap and highly effective strategy to improve students’ learning. Furthermore, Alex suggested I read a short research paper entitled “Cognitive Apprenticeship: Teaching the Craft of Reading, Writing, and Mathematics” by Allan Collins, John Seely Brown and Susan Newman.[i]

The paper was illuminating. It transformed my teaching. The first section explores the characteristics of traditional apprenticeship and how they might be adapted to teach cognitive skills in schools; the second section examines three teaching methods to develop in students the metacognitive skills required for expertise in reading, writing and solving mathematical problems, and the final section outlines a framework for developing and evaluating new pedagogies in schools, based on the traditional apprenticeship model.

The paper identifies that “domain (subject) knowledge…provides insufficient clues for many students about how to actually go about solving problems and carrying out tasks in a domain. Moreover when it is learned in isolation from realistic problem contexts and expert problem-solving practices, domain knowledge tends to remain inert in situations for which it is appropriate, even for successful students”.

In order for my students to use the subject knowledge I knew they possessed, I had to teach them what Collins et al define as “Strategic knowledge: the usually tacit knowledge that underlies an expert’s ability to make use of concepts, facts, and procedures as necessary to solve problems and carry out tasks”.

I was the expert in the room. I knew subconsciously the skills required to apply my subject knowledge to solve an economics problem; the trouble was, I had not consciously taught my students those skills. What I had to do, according to the paper, was “delineate the cognitive and metacognitive processes that heretofore have tacitly comprised expertise”.

I had to find a way to apply “apprenticeship methods to largely cognitive skills”. It required “the externalization of processes that are usually carried out internally”. Ultimately, I had to develop an apprenticeship model of teaching which made my expert thinking visible.

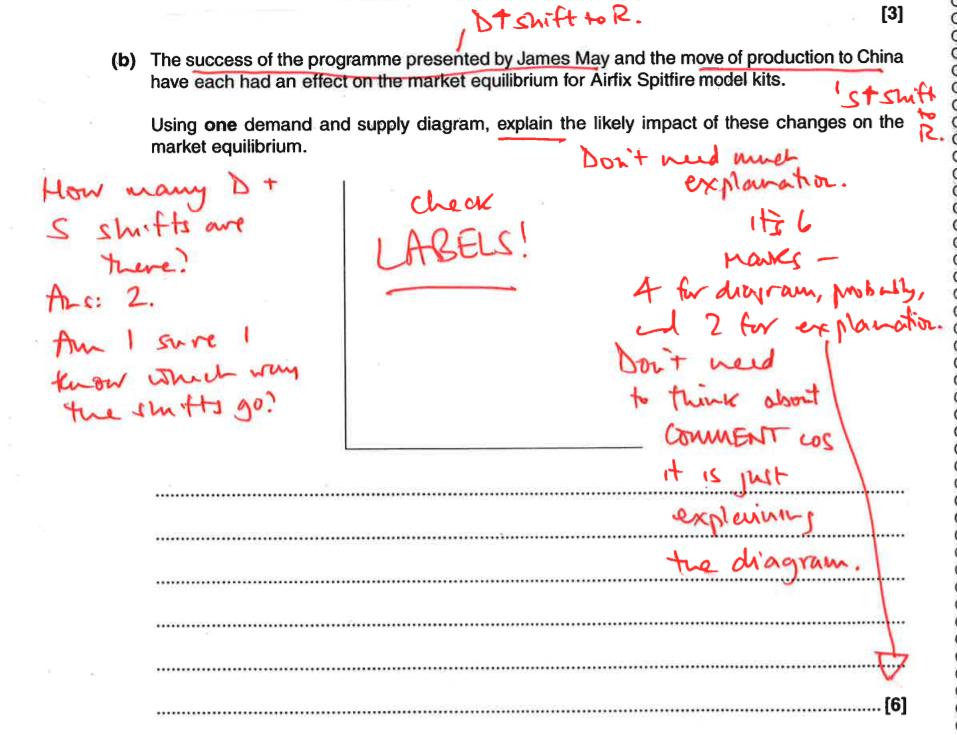

In response to the research paper, here is what I did: in the first lesson after the mocks I completed the same examination paper, not answering the questions but writing on the paper what my brain would have been saying to itself, question by question, had I attempted the paper. I did this in front of the students, live, with what I was thinking and writing projected onto the whiteboard via a visualiser.

What I wrote on the paper I insisted they wrote down verbatim on their own blank copy of the paper, a key feature of this learning experience.

The exercise showed them just how alert my brain is when I am being examined. I was teaching them, apprenticeship-style, how to apply their domain knowledge to a new context when under pressure. I was making my thinking visible.

In the second lesson after the examinations, I surprised them with a new mock paper they hadn’t seen before. They completed the paper. The numerous students who attained a U grade first time round all improved by three or more grades.

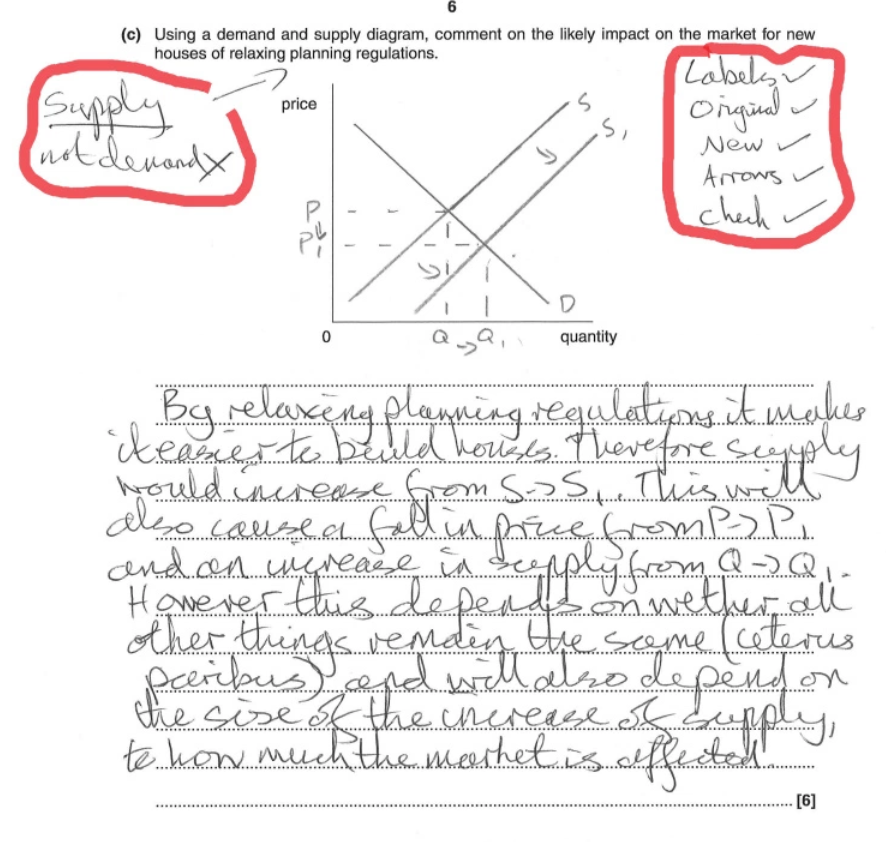

The one student who I know for sure improved precisely because of his use of the metacognition and self-regulation intervention I modelled for him was Oliver. He went from getting 24/60 and a grade U in his first paper to getting 51/60 and a grade A in his second paper. Why am I so sure it was the intervention which helped Oliver improve? Well, look at how he has made his metacognitive processes explicit on paper in his marginal notes. He mimicked the thinking which I modelled.

The important thing to emphasise is that the students made these impressive gains in their examinations without being taught any more Economics A level content. They improved because I taught them the mental processes required to retrieve the knowledge they had learnt from their long-term memories and apply that knowledge in an efficient, precise way which answered the examination questions.

I obsess about the golden thread from intervention to students’ outcomes. Skip a year and in the summer of 2016 those same thirteen A2 Economics students surpassed themselves, attaining a grade B on average, which was 0.27 of a grade higher on average than their aspirational target grades. On the A Level Performance Systems (ALPS) the class performance was rated Outstanding.

Oliver seemed to carry those metacognitive skills with him from year 12; with a B grade target, in the final reckoning he attained an A* in Economics and grade Bs in his three other A level subjects.
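The “grade higher than target” figures above are simple differences between attained and target grades, averaged across a class. Here is a minimal sketch of that arithmetic, assuming a one-point-per-grade scale; the scale and the function names are illustrative assumptions, not the scoring that ALPS itself uses. The only pair of grades given in this article is Oliver’s, so that is the only data used.

```python
# Illustrative one-point-per-grade scale (an assumption, not the ALPS scale).
GRADE_POINTS = {"U": 0, "E": 1, "D": 2, "C": 3, "B": 4, "A": 5, "A*": 6}


def grade_residual(target: str, attained: str) -> int:
    """Grades above (+) or below (-) target for one student."""
    return GRADE_POINTS[attained] - GRADE_POINTS[target]


def mean_residual(results: list[tuple[str, str]]) -> float:
    """Average residual over (target, attained) pairs for a class."""
    return sum(grade_residual(t, a) for t, a in results) / len(results)


# Oliver: target grade B, attained grade A* (the one pair stated in the text).
oliver = grade_residual("B", "A*")
print(oliver)  # 2, i.e. two grades above target
```

A class figure such as 0.27 would be what `mean_residual` returns when given all thirteen (target, attained) pairs. Those pairs are not published here, so none are invented.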

As Collins et al conclude, “ultimately, it is up to the teacher to identify ways in which cognitive apprenticeship can work in his or her own domain of teaching”. Reading their paper prompted me to design a pedagogic approach which modelled explicitly my expert thinking, to the obvious benefit of my students.

But one thing troubles me: I cannot help but wonder how many more of my students could have benefitted if only I had read “Cognitive Apprenticeship” 25 years earlier.

This term I will be teaching writing from different viewpoints and perspectives to a Year 9 English class; different elements of what I have learnt about developing students’ metacognitive writing skills from the “Cognitive Apprenticeship” research paper will inform my teaching.

References

[i] The paper was first published in draft in 1987 as Technical Report No. 403 by the Center for the Study of Reading at the University of Illinois, under the title “Cognitive Apprenticeship: Teaching the Craft of Reading, Writing, and Mathematics”, by Allan Collins (BBN Laboratories) and John Seely Brown and Susan Newman (Xerox Palo Alto Research Center). It is available online at: https://www.ideals.illinois.edu/bitstream/handle/2142/17958/ctrstreadtechrepv01987i00403_opt.pdf?sequence

The final version of the paper was published in the Winter 1991 edition of the American Educator, under the title, “Cognitive Apprenticeship: Making Thinking Visible”, by Allan Collins, John Seely Brown and Ann Holum. It is available online at: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.124.8616&rep=rep1&type=pdf

Thanks John, your method and reflections are really useful. I’m interested in comparing your analyses of those specific students with an average effect size for the whole class. You mentioned that Oliver went from getting 24/60 and a grade U in his first paper to getting 51/60 and a grade A in his second. Is it possible to display a spreadsheet of first- and second-paper scores for the whole class (removing student names of course)?

I’m just looking to get specific examples from teachers of how to measure improvements using the effect size. There has been a lot of critique of the use of that statistic and I’d be interested in a real life example.

Also, you said the students got “0.27 of a grade higher on average than their aspirational target grades”. Would you be able to explain more about what that means?

Thanks
